From Messy Records to Trusted Insights:

Entity Resolution for Wealth, Risk and AI

Post by Gurpinder Dhillon

Bad data is a silent tax that slows decisions, inflates costs, and erodes trust. Industry studies peg the average annual cost of poor-quality data at about $12.9 million per organization, and knowledge workers lose roughly one day each week hunting for information instead of analyzing it.

Hosted by Neudata – a UK-based technology research and advisory firm that specializes in independent intelligence and consulting for the alternative data market – this webinar, From Messy Records to Trusted Insights, focuses on how buy-side teams operationalize entity resolution across onboarding, risk, and AI.

In the session, Dr. Gurpinder Dhillon, Data & AI Strategy Executive at Senzing, calls the $12.9 million figure “conservative,” based on interviews with hundreds of organizations during his doctoral research. Dhillon explains why fragmentation across messy, multilingual, multi-source records is the root cause of poor data quality, and how a rigorous entity resolution discipline creates a defensible, auditable identity layer that teams can actually use.

How Entity Resolution Works (a practical 5-step flow)

Entity resolution is the practice of accurately identifying, linking, and de-duplicating records that refer to the same real-world person, company, or asset across sources – and showing how a link was made, or why it was not. It’s an operational foundation for onboarding, risk, and AI that stands up to audit.

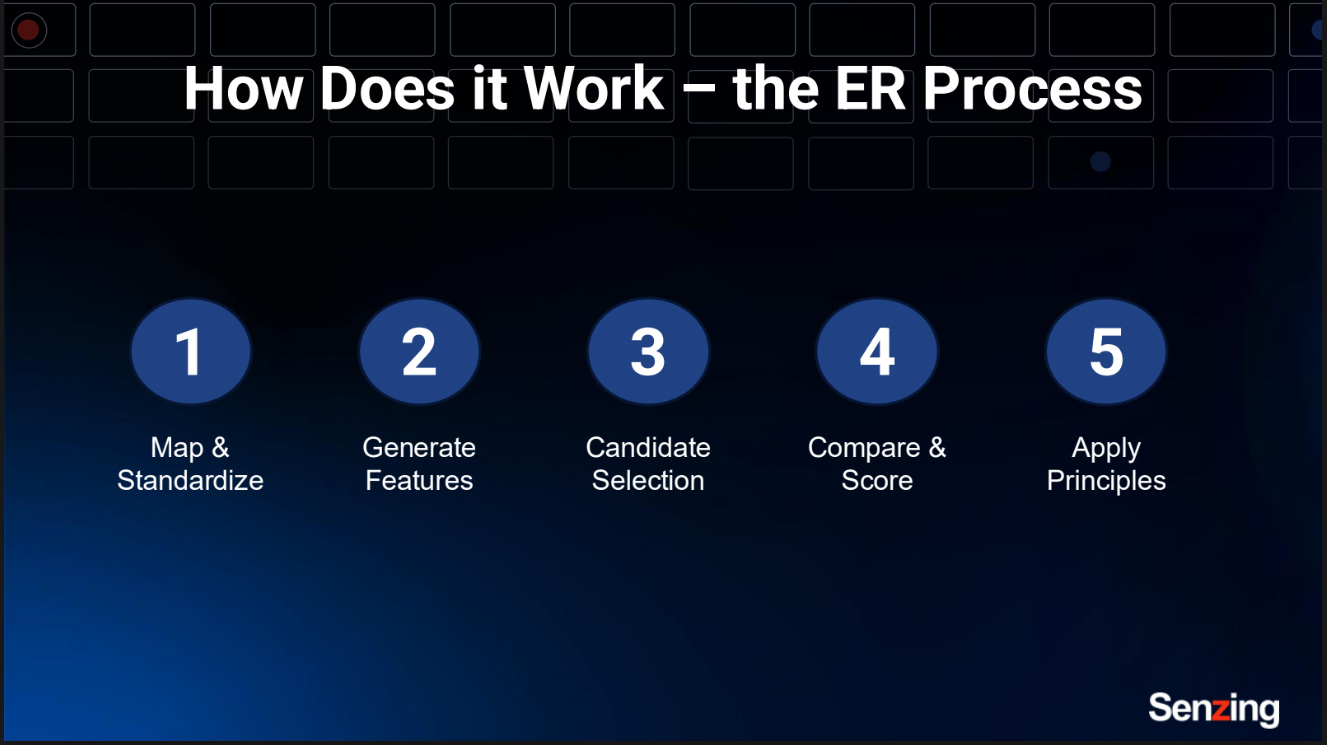

Dr. Dhillon walks through a five-step model that demystifies how modern entity resolution works, from multi-source data ingestion through to traceable, defensible decisions.

- Map & standardize: Ingest data from multiple sources, normalize formats, and prepare fields for matching.

- Generate features: Create matchable signals from names, addresses, phones, and other attributes.

- Candidate selection: Narrow billions of records to the most likely candidates for comparison.

- Compare & score: Apply multiple algorithms to produce probabilities and confidence levels.

- Apply principles with transparency: Produce an auditable artifact that explains every decision.
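The five steps above can be sketched in a few dozen lines of Python. This is a toy illustration only, not how Senzing implements any step: the record fields, the blocking keys, the equal 0.5 weights, and the 0.8 threshold are all assumptions made for the example, and the standard library's `difflib.SequenceMatcher` stands in for the multiple algorithms a real engine would apply.

```python
import re
from collections import defaultdict
from difflib import SequenceMatcher

# Toy records from two hypothetical sources (illustrative data only).
RECORDS = [
    {"source": "crm", "id": "1", "name": "Robert  Smith", "phone": "(555) 010-1234"},
    {"source": "kyc", "id": "A", "name": "rob smith", "phone": "555-010-1234"},
    {"source": "kyc", "id": "B", "name": "Roberta Jones", "phone": "555-010-9999"},
]

def standardize(rec):
    """Step 1: normalize case, whitespace, and phone formatting."""
    name = " ".join(rec["name"].lower().split())
    phone = re.sub(r"\D", "", rec["phone"])
    return {**rec, "name": name, "phone": phone}

def features(rec):
    """Step 2: derive matchable signals (used here as blocking keys)."""
    surname = rec["name"].split()[-1]
    return {f"surname:{surname}", f"phone4:{rec['phone'][-4:]}"}

def candidates(recs):
    """Step 3: only pair up records that share at least one feature."""
    index = defaultdict(list)
    for i, rec in enumerate(recs):
        for key in features(rec):
            index[key].append(i)
    pairs = set()
    for ids in index.values():
        for a in ids:
            for b in ids:
                if a < b:
                    pairs.add((a, b))
    return sorted(pairs)

def score(a, b):
    """Step 4: combine simple comparators into one score in [0, 1]."""
    name_sim = SequenceMatcher(None, a["name"], b["name"]).ratio()
    phone_match = 1.0 if a["phone"] == b["phone"] else 0.0
    return {
        "name_sim": round(name_sim, 2),
        "phone_match": phone_match,
        "score": round(0.5 * name_sim + 0.5 * phone_match, 2),
    }

def resolve(records, threshold=0.8):
    """Step 5: decide, and keep the evidence behind each decision."""
    recs = [standardize(r) for r in records]
    decisions = []
    for i, j in candidates(recs):
        evidence = score(recs[i], recs[j])
        decisions.append({
            "pair": (recs[i]["id"], recs[j]["id"]),
            "match": evidence["score"] >= threshold,
            "evidence": evidence,
        })
    return decisions

for decision in resolve(RECORDS):
    print(decision)
```

Candidate selection is what keeps this tractable at scale: "Roberta Jones" shares no blocking key with the other two records, so she is never compared against them at all.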

Top Four Client Use Case Questions

Neudata clients tend to ask four outcome-driven questions that are best solved using entity resolution:

- Can we onboard faster with fewer duplicates?

For onboarding & customer 360, collapse duplicates in real time, assemble a usable entity resume, and reduce manual effort.

- Can we discover risk and hidden relationships earlier?

For risk, compliance, and fraud, expose non-obvious relationships, enable perpetual vetting, and preserve an auditable trail.

- Can we trust our unified investor fund view?

For wealth & fund 360, link investors, funds, custodians, and portfolios to reduce onboarding friction and accelerate KYC/AML.

- Can we feed consistent entities to AI without rework?

For trusted data in AI, deliver consistent, explainable entities to downstream models and analytics, reducing rework and clarifying outcomes.

Senzing Advantages

In the talk, Dhillon characterizes SENZING® software as a purpose-built, lightweight SDK for real-time, explainable entity resolution that runs in the client’s environment (no data ever goes to Senzing, Inc., or any third party). Dhillon covers a few unique capabilities of Senzing that stand out for practitioners:

Entity Centric Learning™ Technology

Traditional record-to-record systems compare each incoming record to other individual records. Senzing Entity Centric Learning technology, by contrast, assembles everything known about an entity – names, addresses, and other identifying attributes – then evaluates new records against that evolving 360-degree profile. The approach also identifies relationships such as households and organizational structure to disambiguate lookalikes and provide richer context.

Teams simply map raw fields; the engine handles feature extraction, indexing, and scoring. This methodology adapts as new data arrives without requiring a hand-curated “golden” training set or manual tuning. The result is fast performance at scale and improved precision as data accumulates. As Dr. Dhillon puts it, “Every time a new record comes in, it doesn’t just look for a match against all those records—it looks for a match against existing entities, building a comprehensive entity resume that improves over time.”
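To make the contrast with record-to-record matching concrete, here is a minimal sketch of the general idea of comparing each incoming record against an accumulated entity profile. It is not Senzing's algorithm: the `Entity` class, the "count of shared attributes" score, and the threshold of 2 are hypothetical simplifications chosen so the effect is easy to see.

```python
from collections import defaultdict

# Illustrative records: (record id, attributes). All values are made up.
RECORDS = [
    ("A", {"name": "robert smith", "phone": "5550101234", "dob": "1980-02-01"}),
    ("B", {"name": "robert smith", "dob": "1980-02-01", "email": "rsmith@example.com"}),
    ("C", {"name": "bob smith", "phone": "5550101234", "email": "rsmith@example.com"}),
]

def as_profile(record):
    """Wrap a single record as an attribute -> set-of-values profile."""
    return {k: {v} for k, v in record.items()}

def shared(a, b):
    """Count the attributes on which two profiles have a value in common."""
    return sum(1 for k in a if k in b and a[k] & b[k])

class Entity:
    """Everything known so far about one resolved entity."""

    def __init__(self):
        self.profile = defaultdict(set)
        self.record_ids = []

    def absorb(self, rec_id, record):
        self.record_ids.append(rec_id)
        for k, v in record.items():
            self.profile[k].add(v)

def resolve_entity_centric(records, threshold=2):
    """Compare each new record to existing entity profiles, not to raw records."""
    entities = []
    for rec_id, record in records:
        probe = as_profile(record)
        best = max(entities, key=lambda e: shared(probe, e.profile), default=None)
        if best is None or shared(probe, best.profile) < threshold:
            best = Entity()
            entities.append(best)
        best.absorb(rec_id, record)
    return entities

for entity in resolve_entity_centric(RECORDS):
    print(entity.record_ids, dict(entity.profile))
```

In this data, record C shares only a phone number with A and only an email with B, so it falls below the threshold against either record on its own. Against the combined profile built from A and B it agrees on both, which is enough to resolve. A fuller implementation would also need to merge two existing entities when a new record bridges them; this sketch omits that.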

Explainability By Design

Every match decision should come with its own “wire report” – an auditable artifact that shows exactly how records were linked and why records were not linked. As Dr. Dhillon explains, the report enumerates the matching features used, the attribute-level alignments (for example, name + date of birth + another identifier), the weights those attributes carried, and the resulting confidence score, which distinguishes high-confidence matches from low-confidence matches. It also captures and shows discrepancies and normalizations – think Rob versus Robert – so investigators can see what varied and why the system still judged the records as the same, or different.

That transparency underpins regulated workflows, forensic reviews, and model governance. Teams get a traceable explanation for each outcome, a narrative they can defend to auditors, and a practical way to diagnose false positives, tune upstream data quality, and feed trusted entity-resolved data into AI systems without guesswork. Dhillon also notes that Senzing output is designed to make it immediately clear how matches were formed and why certain items were not matched, supporting both compliance investigations and day-to-day analyst work.
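As a rough illustration of what such an artifact might contain, the sketch below builds a small explanation record for one comparison: attribute-level alignments, the weight each attribute carried, any normalizations applied, discrepancies, and the resulting score. The structure, the `NICKNAMES` table, the weights, and the 0.75 threshold are all assumptions for the example; this is not the Senzing output format.

```python
# Hypothetical lookup and weights, for illustration only.
NICKNAMES = {"rob": "robert", "bob": "robert", "liz": "elizabeth"}
WEIGHTS = {"name": 0.4, "dob": 0.4, "tax_id": 0.2}

def normalize_name(raw):
    """Lowercase a name and expand known nicknames token by token."""
    return " ".join(NICKNAMES.get(t, t) for t in raw.lower().split())

def explain(a, b, threshold=0.75):
    """Compare two records and return the evidence behind the decision."""
    report = {"alignments": [], "normalizations": [], "discrepancies": []}
    score = 0.0
    for attr, weight in WEIGHTS.items():
        va, vb = a.get(attr), b.get(attr)
        if va is None or vb is None:
            # Missing on either side: contributes nothing, but is recorded.
            report["alignments"].append(
                {"attribute": attr, "outcome": "missing", "weight": weight})
            continue
        na, nb = va, vb
        if attr == "name":
            na, nb = normalize_name(va), normalize_name(vb)
            for raw, norm in ((va, na), (vb, nb)):
                if raw.lower() != norm:
                    report["normalizations"].append(
                        {"attribute": attr, "raw": raw, "normalized": norm})
        if na == nb:
            score += weight
            outcome = "agree"
        else:
            outcome = "disagree"
            report["discrepancies"].append({"attribute": attr, "values": [va, vb]})
        report["alignments"].append(
            {"attribute": attr, "outcome": outcome, "weight": weight})
    report["score"] = round(score, 2)
    report["decision"] = "match" if report["score"] >= threshold else "no match"
    return report

rec_a = {"name": "Rob Smith", "dob": "1980-02-01", "tax_id": "123"}
rec_b = {"name": "Robert Smith", "dob": "1980-02-01"}
rec_c = {"name": "Rob Smith", "dob": "1975-05-05"}

print(explain(rec_a, rec_b))  # linked: name (after normalization) and dob agree
print(explain(rec_c, rec_b))  # not linked: the dob discrepancy is recorded
```

The point of the design is that the "no match" case is as well documented as the "match" case, so a reviewer can see which attribute blocked the link.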

Faster Mapping for Third Party Data

Many teams enrich first-party records with external sources. Dr. Dhillon outlines a pragmatic path: pre-built open-source mappers for Senzing data partners, so teams can load new data sources in hours, not days. This shortens time-to-value and keeps pipelines maintainable.
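Conceptually, a mapper is a thin translation layer from a vendor's column names to the attribute names the matching engine expects. The sketch below shows that idea in generic form; it is not one of the pre-built Senzing mappers, and every field name in it (`company_nm`, `name_org`, and so on) is a hypothetical placeholder rather than a real vendor or Senzing schema.

```python
# Hypothetical vendor column -> target attribute mapping.
FIELD_MAP = {
    "vendor_key": "record_id",
    "company_nm": "name_org",
    "reg_addr": "addr_full",
    "lei_code": "lei",
}

def map_record(raw, data_source, field_map=FIELD_MAP):
    """Translate one vendor row; return (mapped record, unmapped leftovers)."""
    mapped = {"data_source": data_source}
    unmapped = {}
    for key, value in raw.items():
        if value is None or str(value).strip() == "":
            continue  # skip empty values rather than emitting blank attributes
        target = field_map.get(key)
        if target:
            mapped[target] = str(value).strip()
        else:
            unmapped[key] = value  # keep for review instead of silently dropping
    if "record_id" not in mapped:
        raise ValueError("source row has no usable record identifier")
    return mapped, unmapped

row = {
    "vendor_key": 1001,
    "company_nm": " Acme Holdings Ltd ",
    "reg_addr": "1 Main St, London",
    "lei_code": "",
    "sector": "Industrials",
}
mapped, unmapped = map_record(row, data_source="VENDOR_X")
print(mapped)
print(unmapped)
```

Returning the unmapped fields separately makes it easy to spot vendor columns that still need a mapping decision when a new source is onboarded.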

Improve Data Matching With Entity Resolution

The improvement is tangible. According to Dhillon, “With traditional matching, many teams top out around 70 to 75% resolution. When you augment your existing solution with Senzing, customers see 90%+ matching, which means better decisions from better data.”

If you’re wrestling with duplicate customers, fragmented fund hierarchies, or risky relationship blind spots, this session is worth a watch. It shows how to turn tangled source records into a clean, auditable identity layer and gives you practical steps to get started.

Gurpinder Dhillon

Head of Data Partner Strategy & Ecosystem

Gurpinder Dhillon has over 20 years of experience in data management, AI enablement, and partner ecosystem development across global markets. Gurpinder is also a published author and frequent keynote speaker on AI ethics, master data strategy, and the evolving role of data in business innovation. He currently leads the strategic direction and execution of the Senzing data partner ecosystem.